Meta's Next Llama AI Models Are Training on a GPU Cluster 'Bigger ...

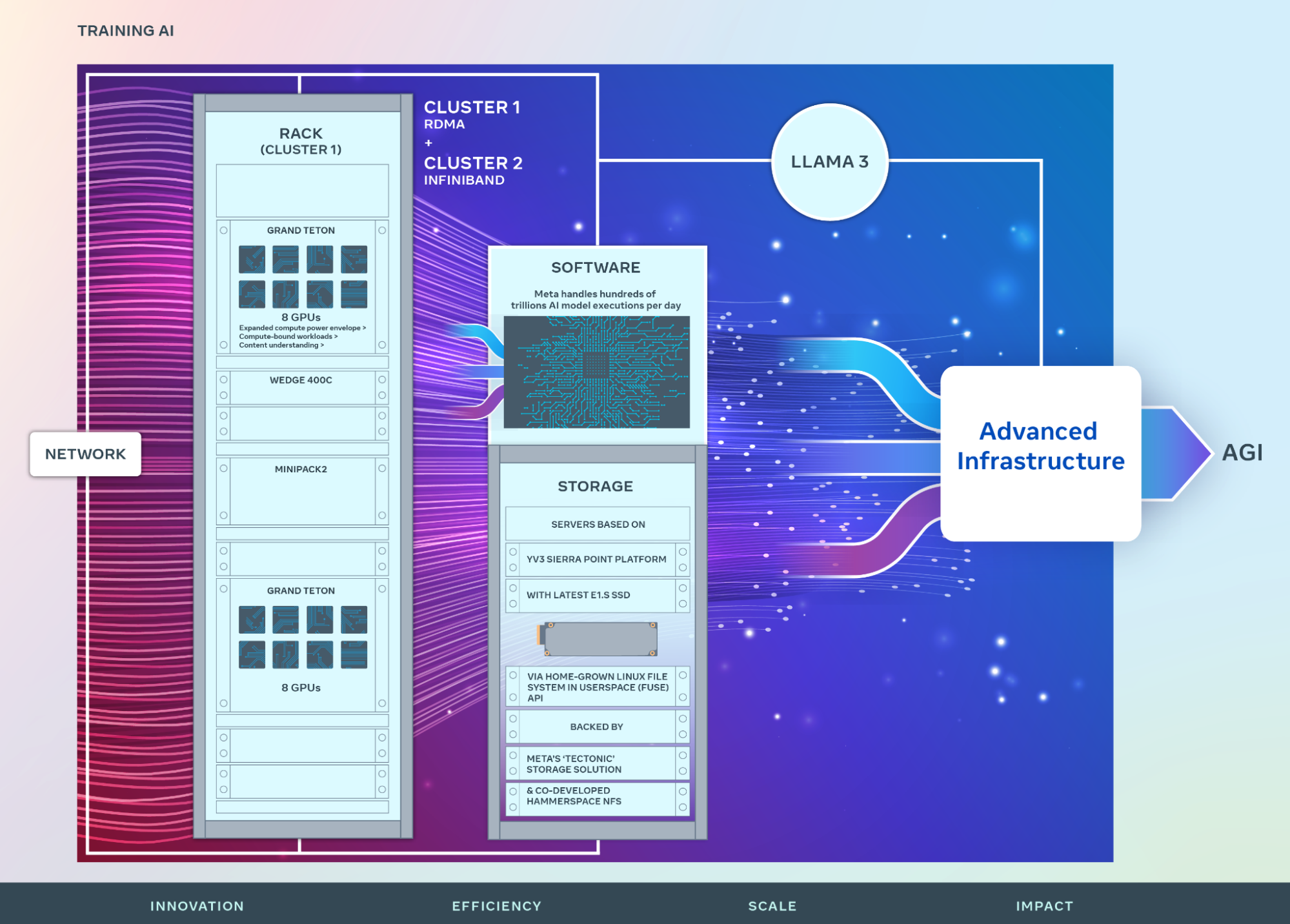

Meta CEO Mark Zuckerberg says the next version of the company's Llama model is being trained on a GPU cluster larger than any previously reported. Development of Llama 4 is well underway, he said, with a launch anticipated in the near future.

Advancements in AI Training

Zuckerberg said the cluster exceeds 100,000 H100s, the Nvidia GPUs widely used to train AI systems. The added computing power is expected to make the Llama models more capable, and the smaller Llama 4 models are likely to be ready first.

Scaling up training with more computing power and more data is widely seen as key to building more capable AI models. While Meta appears to lead in cluster size for now, other major players are likely working toward compute clusters with more than 100,000 advanced chips.

Collaboration with Nvidia

Meta and Nvidia previously shared details of clusters of about 25,000 H100s that were used to develop Llama 3. A cluster of more than 100,000 H100s is at least four times that size, which underscores how much more computing power Meta is committing to Llama 4.

Specific details about Llama 4's capabilities remain undisclosed. However, Zuckerberg hinted at new modalities, stronger reasoning, and significantly faster performance.

Open Source Model Approach

Meta's approach to AI sets it apart from its competitors. Unlike models from other tech giants, which are accessible only through APIs, Llama models can be downloaded in full for free. That appeals to startups and researchers who want more control over their models and data.

While Meta touts Llama as open source, it places certain restrictions on commercial use. The company also does not disclose details of how the models are trained, which limits what outsiders can learn about how they work.

Engineering Challenges and Energy Consumption

Managing so many chips to develop Llama 4 presents significant engineering challenges and requires a great deal of energy. Estimates suggest that a cluster of 100,000 H100 chips could require about 150 megawatts of power.
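To put the 150-megawatt estimate in perspective, the short sketch below works out what it implies per chip and per year. The cluster size and power figures come from the estimate above. The assumption that the cluster runs continuously all year is ours, and the function names are illustrative only. The per-chip figure is simply the total divided by the chip count, so it includes whatever overhead the original estimate includes.

```python
# Back-of-the-envelope arithmetic based on the estimate cited in the article.
CLUSTER_POWER_MW = 150      # estimated power for the whole cluster (from the article)
GPU_COUNT = 100_000         # number of H100 chips (from the article)
HOURS_PER_YEAR = 24 * 365   # ASSUMPTION: continuous, year-round operation


def per_gpu_kw(cluster_mw, gpus):
    """Average power per chip in kilowatts (total power / chip count)."""
    return cluster_mw * 1000 / gpus


def annual_energy_gwh(cluster_mw, hours=HOURS_PER_YEAR):
    """Energy used over the given number of hours, in gigawatt-hours."""
    return cluster_mw * hours / 1000


print(per_gpu_kw(CLUSTER_POWER_MW, GPU_COUNT))   # 1.5 (kW per chip)
print(annual_energy_gwh(CLUSTER_POWER_MW))       # 1314.0 (GWh per year)
```

Under these assumptions, the estimate works out to about 1.5 kilowatts per chip and roughly 1.3 terawatt-hours per year.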

Meta is investing heavily in data centers and infrastructure, and it expects capital spending to increase in the coming year. Despite the considerable costs, growth in ad sales revenue allows the company to keep funding its AI efforts.

Future Implications of Llama 4

Zuckerberg expects Llama 4 to power a broader range of features across Meta's services, particularly the Meta AI chatbot available on its various platforms. With more than 500 million monthly users, Meta AI could eventually generate revenue through ads.

As Meta continues to scale up training for its Llama models, the industry awaits the release of Llama 4 and its impact on AI development.