Google's Gemini 2.5 Pro Claims Top Spot as World's Best AI Model...

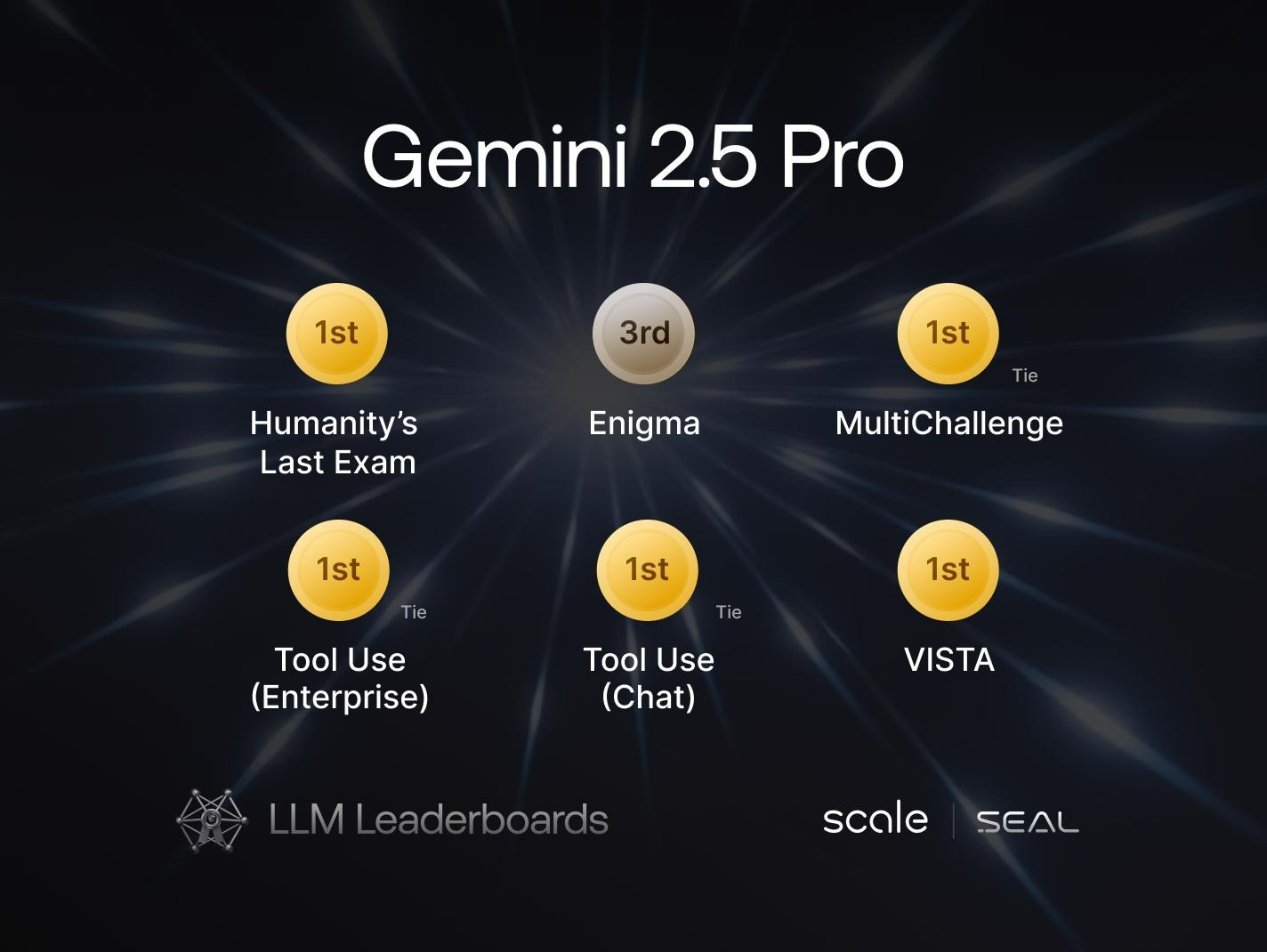

In a significant development for artificial intelligence, Google's latest large language model, Gemini 2.5 Pro, has taken the number one spot on the LiveBench.ai leaderboard. The latest evaluation shows Gemini 2.5 Pro substantially outperforming the top models from industry leaders Anthropic and OpenAI, placing Google at the front of the rapidly evolving AI race.

The Top 3 on LiveBench.ai

Google's experimental Gemini 2.5 Pro model achieved a global average score of 82.35 on LiveBench.ai, well ahead of its closest competitors. Anthropic's Claude 3.7 Sonnet with extended thinking took second place with 76.10, while OpenAI's o3-mini-2025-01-31-high followed closely at 75.88.

The evaluation, which measures several dimensions of AI performance, showed Gemini 2.5 Pro's strength across many domains, with particularly strong results in reasoning, mathematics, and coding. These results indicate that Google has built a well-rounded system that performs well across diverse applications rather than excelling in only one area.

Unparalleled Reasoning Abilities

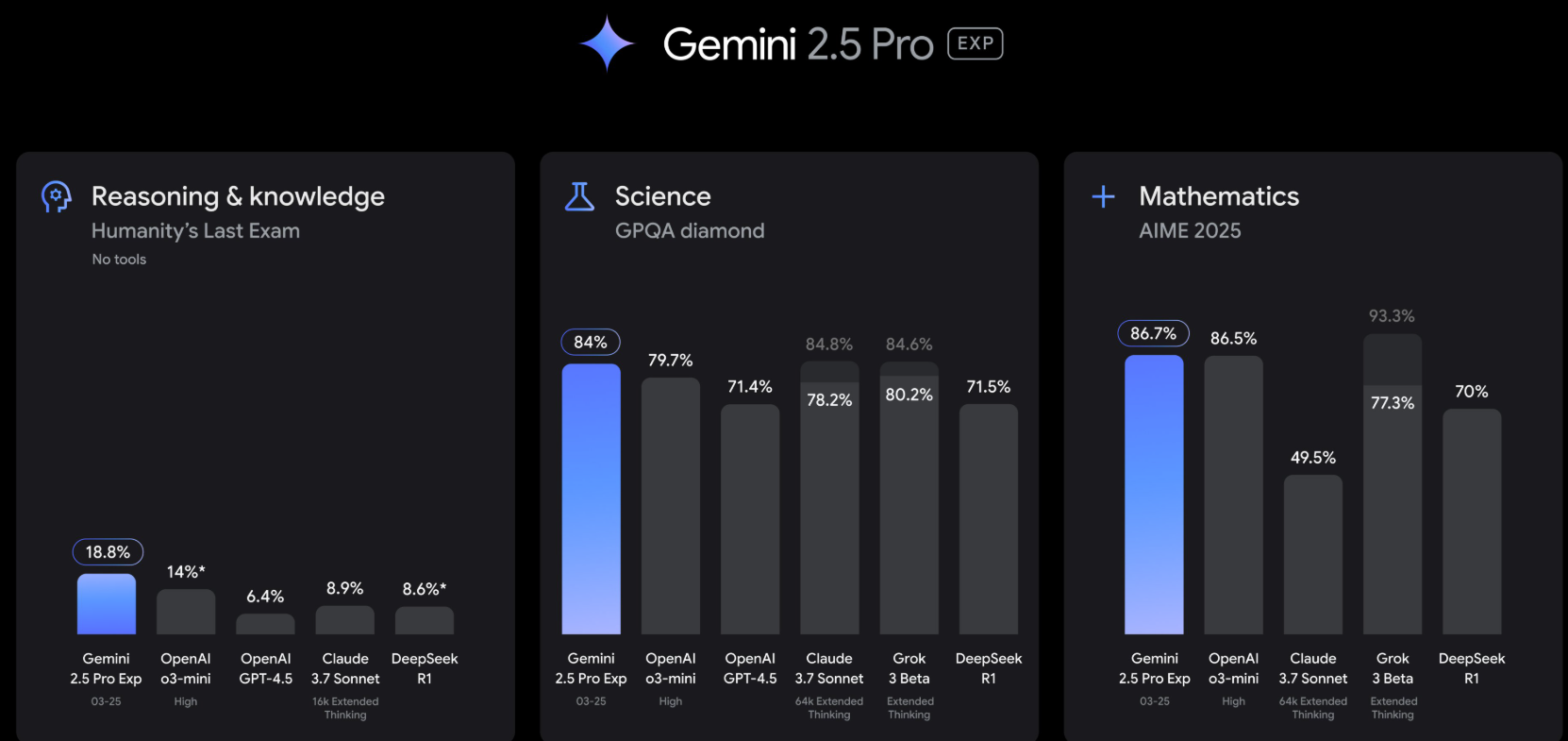

Gemini 2.5 Pro scored an impressive 89.75 on reasoning tasks, outperforming the competing models in logical and analytical thinking.

Mathematical Excellence

With a score of 90.20 in mathematics, Gemini 2.5 Pro shows exceptional mathematical problem-solving ability, making it a leading choice for demanding mathematical work.

Superior Coding Performance

Its score of 85.87 on coding tasks positions Gemini 2.5 Pro as a valuable tool for programming assistance and software development.

Competitive Edge in Technical Applications

The combination of strengths in reasoning, mathematics, and coding gives Gemini 2.5 Pro a distinct advantage for technical and analytical applications where precision and logical processing are essential.

Gemini 2.5 Pro's rise to the top position likely reflects advances in model architecture and training methodology. Its strong performance in reasoning and mathematics suggests significant progress in handling complex logical structures and mathematical operations, areas that have traditionally been challenging for language models.


Although language was Gemini 2.5 Pro's lowest-scoring category, its language score still exceeds those of the competing models, indicating that Google has made gains across the full range of AI capabilities. This balance is particularly impressive given the usual trade-offs between different types of AI tasks.

The model's solid data analysis performance further increases its utility for business intelligence and research applications, where extracting insights from complex datasets is essential. Its instruction-following score (IF Average: 80.59) shows good alignment with user intent, though it trails its competitors slightly in this specific area.

What makes Gemini 2.5 Pro's achievement particularly notable is the size of its lead. Its global average is 6.25 points higher than that of its nearest competitor, a substantial leap rather than an incremental improvement, which suggests that Google may have made fundamental advances in its architecture or training methodology.
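For a quick check on the size of the lead, the short Python sketch below recomputes the margins from the three global averages reported on LiveBench.ai. The dictionary keys are illustrative labels chosen here, not official LiveBench identifiers, and the script only does arithmetic on the published scores; it does not fetch anything from the leaderboard.

```python
# Global average scores as reported in this article (LiveBench.ai).
# Keys are illustrative labels, not official LiveBench model identifiers.
scores = {
    "Gemini 2.5 Pro (experimental)": 82.35,
    "Claude 3.7 Sonnet (thinking)": 76.10,
    "o3-mini-2025-01-31-high": 75.88,
}


def margins(table: dict[str, float]) -> dict[str, float]:
    """Return how many points the top-scoring model leads each other model by."""
    leader = max(table, key=table.get)
    return {
        name: round(table[leader] - score, 2)
        for name, score in table.items()
        if name != leader
    }


for name, gap in margins(scores).items():
    print(f"Lead over {name}: {gap:.2f} points")
# Lead over Claude 3.7 Sonnet (thinking): 6.25 points
# Lead over o3-mini-2025-01-31-high: 6.47 points
```

The margin over second place, 6.25 points, is the gap discussed in the paragraph above.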

Gemini 2.5 Pro's mathematics score of 90.20 is one of the highest recorded for an AI model in this category, leaving less than 10 points of headroom below the benchmark's maximum score of 100.

Despite leading overall, Gemini 2.5 Pro still shows room for improvement in instruction following (IF Average), where both Anthropic and OpenAI models demonstrate slightly stronger performance.

The evaluation results suggest that we may be entering a new phase of AI development where balanced, general-purpose models are beginning to outperform more specialized systems across multiple domains.

Google's breakthrough with Gemini 2.5 Pro comes after a period when many industry observers had positioned OpenAI and Anthropic as the leading innovators in the large language model space.

The substantial performance gap between Gemini 2.5 Pro and its competitors suggests that AI capabilities are still advancing quickly, with significant improvements arriving within increasingly short development cycles.

This latest development signals an intensifying competition among major AI labs and suggests that the landscape of AI leadership may continue to shift rapidly as research breakthroughs translate into more capable models.