Meta discusses the current state and outlook for open hardware for AI

Demand for AI hardware grows every year, and Meta responded by expanding its AI training clusters 16-fold in 2023. The company expects this growth to continue. Beyond adding computing power, Meta says it will also need to increase network capacity more than 10-fold.

To keep pace with this expansion, Meta is investing in open hardware, which the company considers the most efficient and effective approach. Meta states that investing in open hardware will maximize the potential of AI and foster continuous innovation. As part of this commitment, Meta has unveiled three innovations: an open rack design, a new AI platform, and advanced network fabric and components.

Meta's 'Catalina' High-Performance Rack

Meta's high-performance rack, 'Catalina,' is designed specifically for AI workloads. As of October 2024, Catalina supports the latest NVIDIA GB200 Grace Blackwell Superchip. With a power capacity of up to 140 kW and liquid cooling, the rack is built to meet the growing demands of AI infrastructure. Its design emphasizes modularity, allowing users to customize it for specific AI workloads.

Meta's Next-Generation AI Platform 'Grand Teton'

Meta's next-generation AI platform, 'Grand Teton,' first introduced in 2022, has been updated. Grand Teton is a single monolithic system that integrates power, control, computing, and fabric interfaces, which simplifies deployment and allows large-scale AI inference workloads to scale efficiently. The latest version adds support for the new AMD Instinct MI300X and increases computing power, memory size, and network bandwidth.

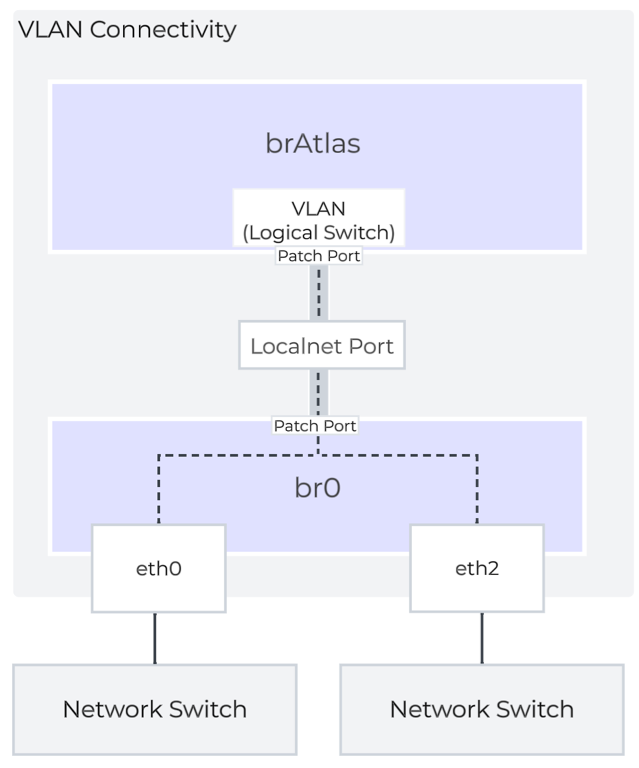

Open, Vendor-Independent Network Backend

Meta has developed a vendor-independent network backend known as the Disaggregated Scheduled Fabric (DSF). This disaggregated network architecture aims to deliver scalable, high-performance networks for data-intensive applications such as AI and machine learning. Meta has also introduced new 51T fabric switches based on Broadcom and Cisco ASICs, along with a NIC module named FBNIC.

Meta is also collaborating with Microsoft to promote open innovation. Together, they are working on a new disaggregated power rack called 'Mount Diablo,' which is designed to increase the number of AI accelerators that fit in each rack.

Meta emphasizes that open software frameworks need open hardware frameworks alongside them to provide the high-performance, cost-effective, and adaptable infrastructure necessary for advancing AI. The company encourages participation in the Open Compute Project community to drive further innovation in this space.