Google Updates AI Model, Enables Long-Context Understanding

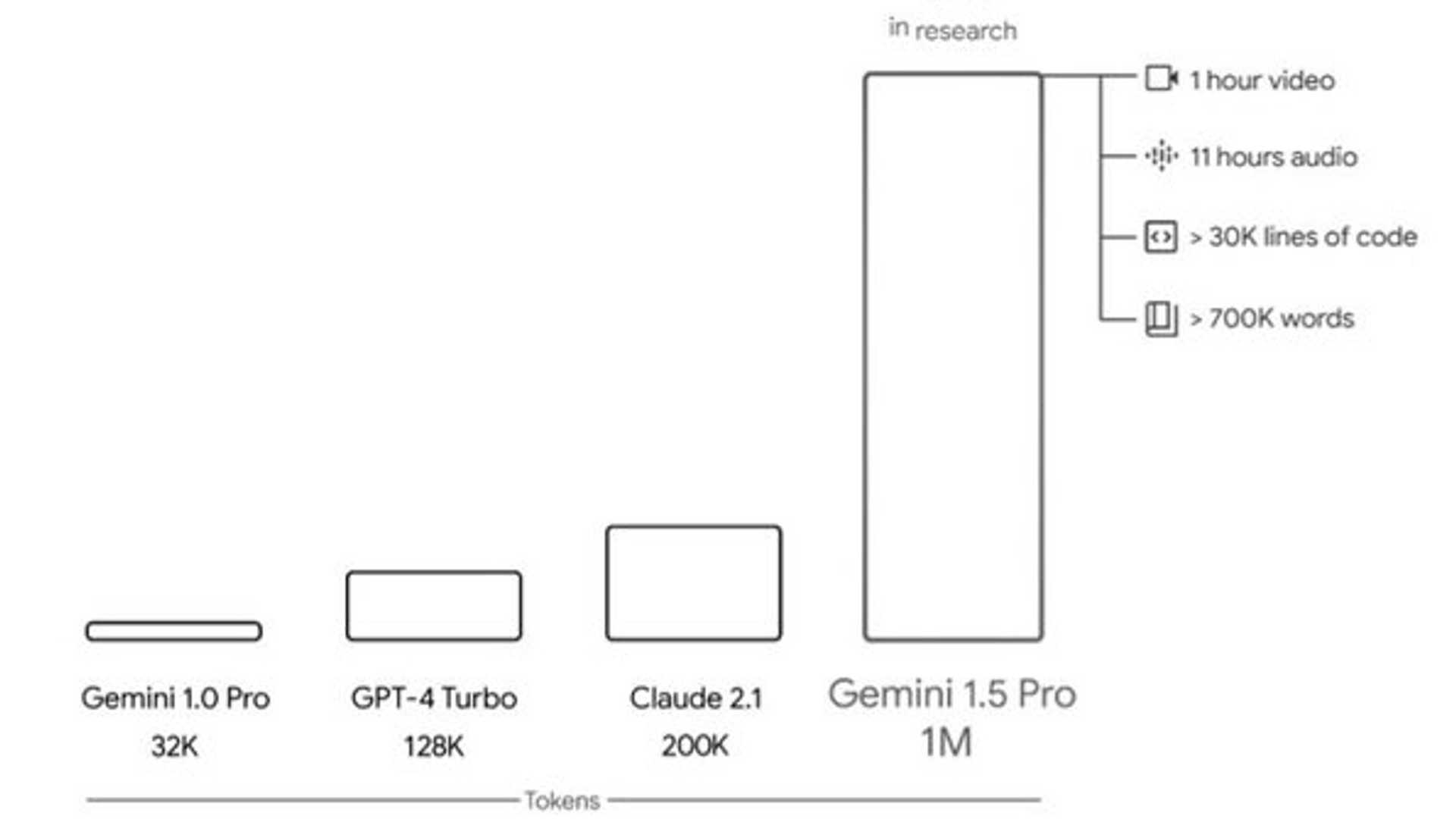

Months after Google made its Gemini AI model public, it has rolled out a new version that can handle significantly more audio, video, and text input than OpenAI's GPT-4. Gemini 1.5, the latest iteration of Google's Gemini technology, brings improvements across several areas. The first model in this generation, Gemini 1.5 Pro, achieves quality comparable to Gemini 1.0 Ultra while using less compute.

Google DeepMind CEO Demis Hassabis, writing on behalf of the Gemini team, and Alphabet CEO Sundar Pichai introduced the new model in a blog post. In it, Hassabis described the team's ongoing work to optimize the technology, improve latency, reduce computational requirements, and enhance the overall user experience.

Drawing on his background as a neuroscientist, Hassabis compared the model's expanded input capacity to a person's working memory to highlight the significance of the advance. Being able to take in far more information at once opens up a wide range of potential applications for the updated model.

Memory Integration in AI Models

Memory retention in AI models has recently become a focal point of discussion, particularly since OpenAI began testing a feature that lets ChatGPT retain information from previous conversations. The feature works much like the way search engines and e-commerce platforms store information users provide for use in later interactions.

According to Captify CPO Amelia Waddington, adding memory to ChatGPT could benefit the retail sector by supporting personalized product recommendations based on a user's past interactions.

Empowering Developers with Gemini Pro

Releasing Gemini Pro to developers through AI Studio and the API on Google's Vertex AI cloud platform is a significant step. Developers now have new tools for integrating Gemini into their applications, including support for processing video and audio content.

Google has also added Gemini-powered features to its web-based coding tool, giving developers AI-assisted debugging and testing. Gemini 1.5 Pro itself can analyze, classify, and summarize large amounts of content supplied within its context window.

In one example, Hassabis described the model analyzing the 402-page transcripts from the Apollo 11 mission to the Moon, demonstrating its ability to reason about conversations, events, and details across a lengthy document. The model also shows advanced understanding and reasoning across other media, such as video.

When given a 44-minute silent Buster Keaton film, for instance, the model can break down plot points, events, and small details that might escape a casual viewer. It also shows improved problem-solving on long blocks of code, offering insights, suggestions, and explanations for complex programming scenarios.