AI tools like ChatGPT and Google's Gemini are 'irrational' and prone to errors

AI is often assumed to be a model of logical reasoning, but recent research suggests that AI models may behave more illogically than humans. A study by researchers at University College London found that even the best-performing AIs were prone to irrationality and simple mistakes, and most of the models gave the wrong answer more than half the time.

The study tested seven of the top AI models on classic logic puzzles designed to assess human reasoning. Surprisingly, the researchers found that the models were irrational in different ways from humans, and some even refused to answer logic questions on 'ethical grounds'.

Challenges Faced by AI Models

The researchers tested several large language models, including versions of OpenAI's ChatGPT, Meta's Llama, Anthropic's Claude 2, and Google Bard (now known as Gemini). Each model was given 12 classic logic puzzles to evaluate its reasoning abilities.

One problem was inconsistency. Asked the same question more than once, the same model would often give different and even contradictory answers, a sign that it was not reasoning rationally or logically.

The Monty Hall Problem

One of the classic puzzles presented to the models was the Monty Hall Problem, a probability puzzle whose correct answer many people find counterintuitive. The AI models also struggled to answer it correctly.
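In the standard version of the puzzle, a prize is hidden behind one of three doors. The contestant picks a door, the host (who knows where the prize is) opens one of the other two doors to show that it is empty, and the contestant may then switch to the remaining closed door. The Python sketch below is our own illustration, not code from the study. It simulates that standard version, assuming the host always opens an empty door and picks at random when two are available. Staying wins only when the first pick was right, which happens one time in three, so switching wins about two times in three.

```python
import random


def play_monty_hall(switch: bool, rng: random.Random) -> bool:
    """Play one round of the standard game; return True if the contestant wins."""
    doors = [0, 1, 2]
    prize = rng.choice(doors)
    pick = rng.choice(doors)
    # Assumption: the host knowingly opens a door that is neither the pick nor the prize.
    host_options = [d for d in doors if d != pick and d != prize]
    opened = rng.choice(host_options)
    if switch:
        # Move to the one door that is still closed and not the original pick.
        pick = next(d for d in doors if d != pick and d != opened)
    return pick == prize


def win_rate(switch: bool, trials: int = 100_000, seed: int = 0) -> float:
    """Estimate the probability of winning with a fixed strategy."""
    rng = random.Random(seed)
    wins = sum(play_monty_hall(switch, rng) for _ in range(trials))
    return wins / trials


if __name__ == "__main__":
    print(f"stay:   {win_rate(switch=False):.3f}")  # should be close to 1/3
    print(f"switch: {win_rate(switch=True):.3f}")   # should be close to 2/3
```

A simulation is used here rather than a formula because it makes the host's behaviour explicit: if the host opened doors at random and sometimes revealed the prize, the two-thirds result would no longer hold.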

The models stumbled on other puzzles too. In one, Meta's Llama 2 model consistently mistook vowels for consonants, giving wrong answers even when its reasoning was otherwise correct. In some cases, the chatbots refused to answer questions on ethical grounds, citing concerns about gender stereotypes and inclusivity.

Implications and Future Challenges

The researchers emphasized that this irrationality has significant implications for using AI in critical fields such as diplomacy and medicine. Some models performed better than others, but understanding how any of them reach their answers remains a challenge.

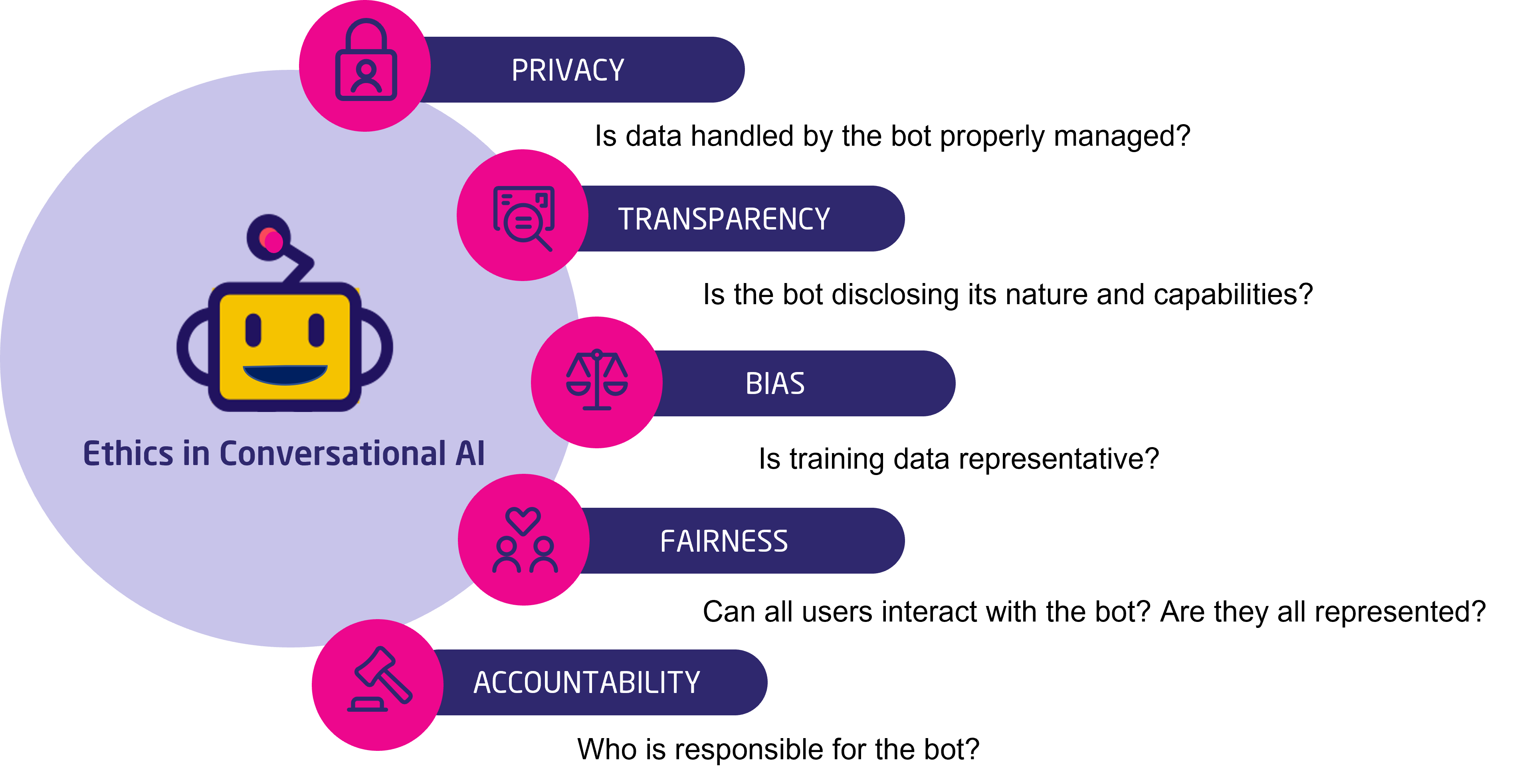

OpenAI CEO Sam Altman has admitted that it is difficult to understand how models like ChatGPT arrive at their conclusions. The researchers also warned that training AI models to perform better on such tests risks introducing human biases.

Despite advancements in AI technology, the study underscores the need to further investigate and address the inherent biases and irrational behavior exhibited by these models.