ChatGPT's latest model may be a regression in performance ...

According to a new report from Artificial Analysis, GPT-4o, OpenAI’s flagship large language model for ChatGPT, has regressed significantly in recent weeks, putting the state-of-the-art model’s performance on par with the far smaller and notably less capable GPT-4o mini.

Concerns and Analysis

This analysis comes less than 24 hours after the company announced an upgrade for the GPT-4o model. OpenAI claimed improvements in creative writing ability and working with uploaded files, promising deeper insights and more thorough responses.

However, these claims have been called into question. According to Artificial Analysis, independent evaluations show that the new release performs worse than the August release of GPT-4o. The model's score on the Artificial Analysis Quality Index dropped from 77 to 71, level with GPT-4o mini.

Performance Metrics

GPT-4o’s score on the GPQA Diamond benchmark fell from 51% to 39%, and its score on the MATH benchmark fell from 78% to 69%. At the same time, the model's output speed has more than doubled: it now produces around 180 tokens per second, up from about 80.
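To put these figures side by side, the short Python sketch below computes the absolute and relative change for each number reported in this article. The dictionary labels and the `change` helper are illustrative names, not part of any Artificial Analysis tooling, and the values are only the rounded figures quoted here.

```python
# Figures as quoted in this article: (August release, November release).
reported = {
    "Artificial Analysis Quality Index": (77, 71),
    "GPQA Diamond (%)": (51, 39),
    "MATH (%)": (78, 69),
    "Output speed (tokens/s)": (80, 180),
}


def change(before, after):
    """Return (absolute change, relative change in percent of the old value)."""
    delta = after - before
    return delta, 100.0 * delta / before


for name, (before, after) in reported.items():
    delta, rel = change(before, after)
    print(f"{name}: {before} -> {after} ({delta:+d}, {rel:+.1f}%)")
```

Note that for the two benchmarks the absolute change is in percentage points, while the relative change is a percentage of the earlier score, so the two numbers should not be confused.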

Recommendations and Conclusion

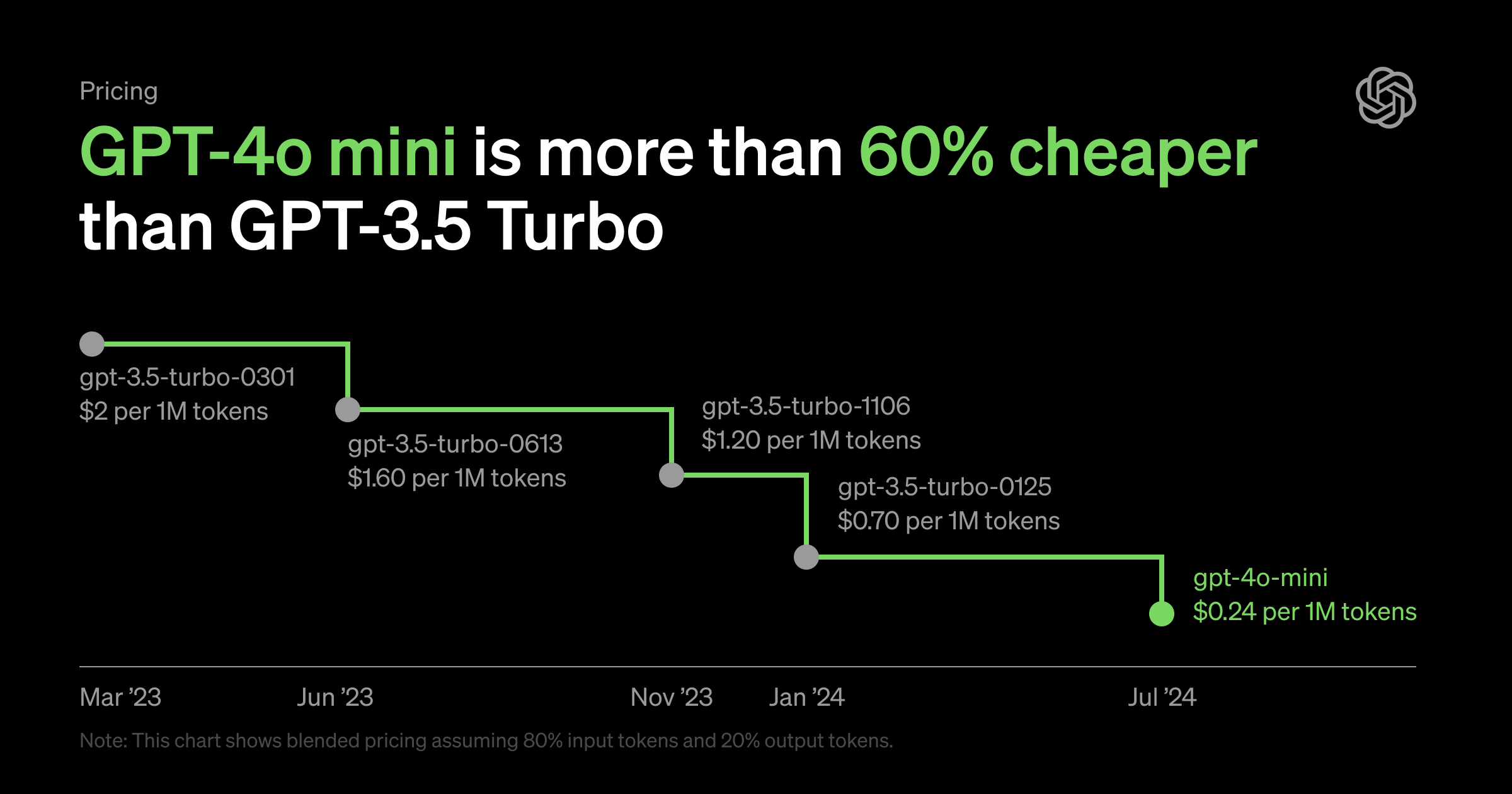

Based on the data, researchers suggest that the November 20th GPT-4o model may be a smaller model than the August release. Despite this regression, OpenAI has not changed its prices. Developers are advised not to move workloads off the August version without thorough testing.
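One way to act on that advice is a simple regression gate: score both versions on your own evaluation set and hold the migration if any task drops by more than a chosen margin. The sketch below is a minimal illustration in Python. The `regressions` function, the `max_drop` threshold of 2 points, and the task names are all assumptions made for this example, and it assumes both score dictionaries cover the same tasks.

```python
def regressions(baseline, candidate, max_drop=2.0):
    """Return tasks where the candidate scores more than max_drop points below the baseline.

    Assumes every task in `baseline` also appears in `candidate`.
    """
    return {
        task: (baseline[task], candidate[task])
        for task in baseline
        if baseline[task] - candidate[task] > max_drop
    }


# Illustrative input only: the benchmark figures quoted above stand in
# for scores from your own evaluation set.
august = {"GPQA Diamond": 51, "MATH": 78}
november = {"GPQA Diamond": 39, "MATH": 69}

failed = regressions(august, november)
print("hold migration" if failed else "ok to migrate", failed)
```

In practice the scores would come from prompts that resemble your production workload, since public benchmarks may not reflect how a model behaves on a specific task.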

About GPT-4o

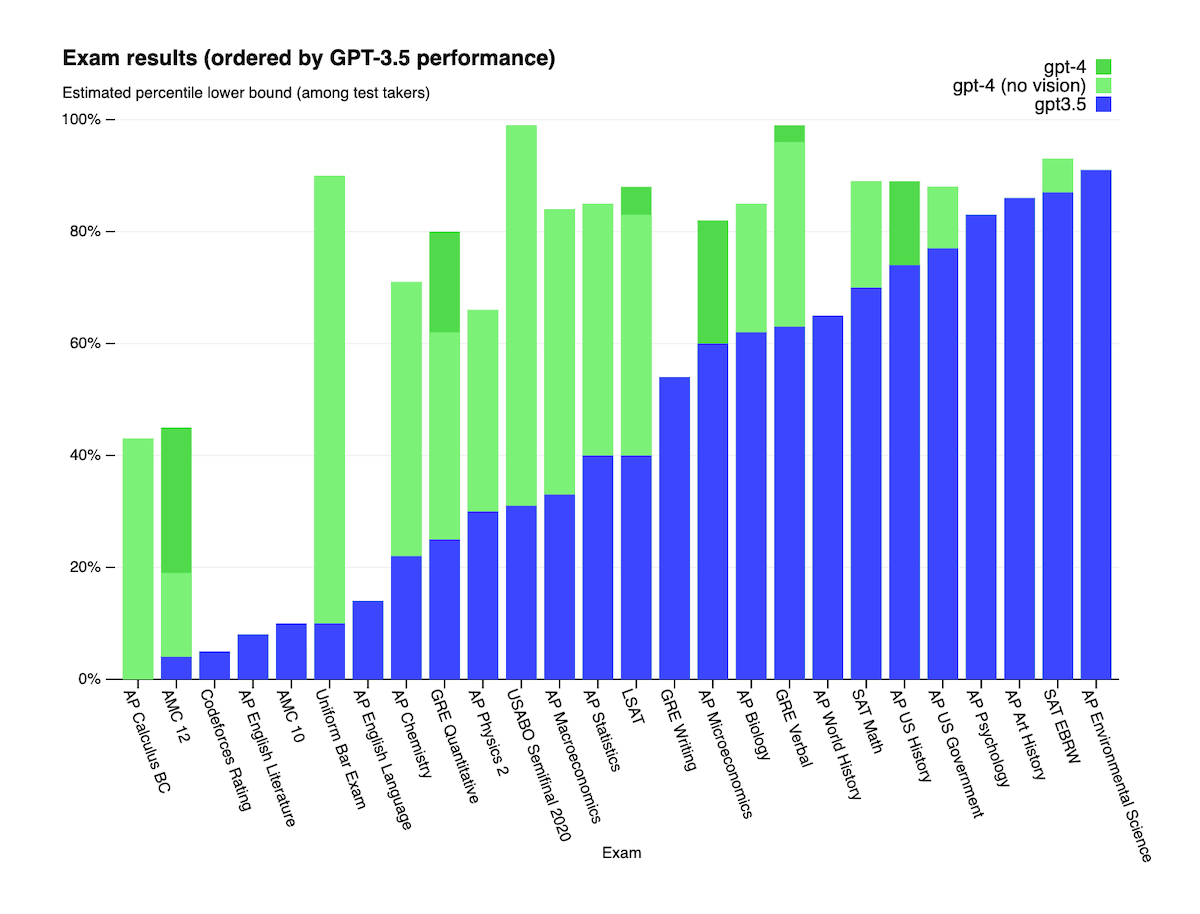

GPT-4o was introduced in May 2024 to outperform existing models like GPT-3.5 and GPT-4. It boasts cutting-edge results in voice, multilingual, and vision tasks, making it suitable for advanced applications such as real-time translation and conversational AI.