Meta's AI video model Segment Anything Model 2 AI lets you add...

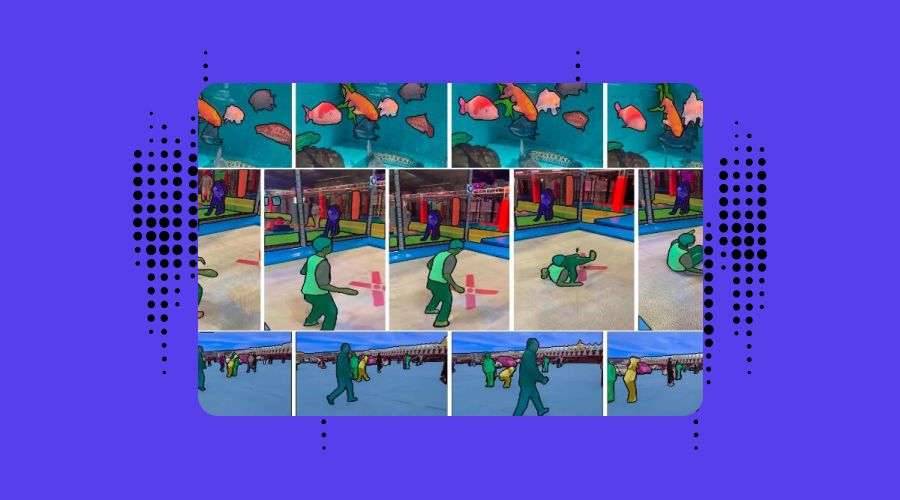

Meta has unveiled its latest AI model, Segment Anything Model 2 (SAM 2), which is designed to identify the specific pixels that belong to an object in a video. It builds on the original Segment Anything Model, released last year, which has already played a significant role in the development of Instagram features such as 'Backdrop' and 'Cutouts'.

According to Meta, SAM 2 is primarily geared towards video applications. The company claims the model can segment any object in an image or video and track it consistently across all frames in real time.
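To make the idea of segmenting and tracking concrete, the sketch below shows what a per-frame segmentation mask looks like as data and how the overlap between consecutive frames can be measured. It is a toy illustration in plain Python, not Meta's code or the SAM 2 API: the helper names (`make_frame_mask`, `mask_area`, `mask_bbox`, `mask_iou`) and the tiny 4x6 "video" are assumptions made up for this example.

```python
# Toy illustration only: this is NOT the SAM 2 API.
# A segmentation mask marks which pixels of a frame belong to the object
# (1 = object pixel, 0 = background).

def make_frame_mask(left, height=4, width=6):
    """Hypothetical frame: a 2x2 object in rows 1-2, columns left..left+1."""
    return [
        [1 if 1 <= r <= 2 and left <= c <= left + 1 else 0 for c in range(width)]
        for r in range(height)
    ]

def mask_area(mask):
    """Number of pixels assigned to the object."""
    return sum(sum(row) for row in mask)

def mask_bbox(mask):
    """Bounding box (top, left, bottom, right) of the object, or None if empty."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    cols = [c for row in mask for c, v in enumerate(row) if v]
    if not rows:
        return None
    return (min(rows), min(cols), max(rows), max(cols))

def mask_iou(a, b):
    """Intersection over union of two same-sized masks."""
    inter = sum(x & y for ra, rb in zip(a, b) for x, y in zip(ra, rb))
    union = sum(x | y for ra, rb in zip(a, b) for x, y in zip(ra, rb))
    return inter / union if union else 0.0

# The object moves one pixel to the right in each frame.
video_masks = [make_frame_mask(left) for left in range(3)]

for i, mask in enumerate(video_masks):
    print(i, mask_area(mask), mask_bbox(mask))

print(mask_iou(video_masks[0], video_masks[1]))
```

In a real system the masks would come from the model rather than a hand-written function. The point here is only that "tracking an object across frames" amounts to producing one such mask per frame for the same object.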

Diverse Applications of SAM 2

Aside from its relevance in social media and mixed reality scenarios, Meta has highlighted additional applications of its segmentation models. The previous version was utilized in oceanic research, disaster relief efforts, and cancer screening initiatives. With SAM 2, Meta aims to enhance the process of annotating visual data for training computer vision systems, including those used in autonomous vehicles.

Meta also says SAM 2 could enable new ways of selecting and interacting with objects in live video and other real-time settings, opening up creative possibilities for users.

Industry Recognition

Meta CEO Mark Zuckerberg discussed SAM 2 with Nvidia CEO Jensen Huang and highlighted the scientific and technological implications of the new model, TechCrunch reported.

Disclaimer: This article is a publication of THG PUBLISHING PVT LTD. and its affiliated companies. All rights reserved.