Are Brains and AI Converging?—an excerpt from 'ChatGPT and the Future of AI: The Deep Language Revolution'

In his new book, to be published next week, computational neuroscience pioneer Sejnowski tackles debates about AI’s capacity to mirror cognitive processes.

In the latter half of the twentieth century, physics coasted along on discoveries made in the first half of the century. The theory of quantum mechanics gave us insight into secrets of the universe, which led to a cornucopia of practical applications. Then physics took on another grand challenge: complexity. This included complex natural systems, such as ecosystems and climate, as well as human-made systems, such as economic markets and transportation systems. The human brain and the social systems that humans inhabit are the ultimate complex systems.

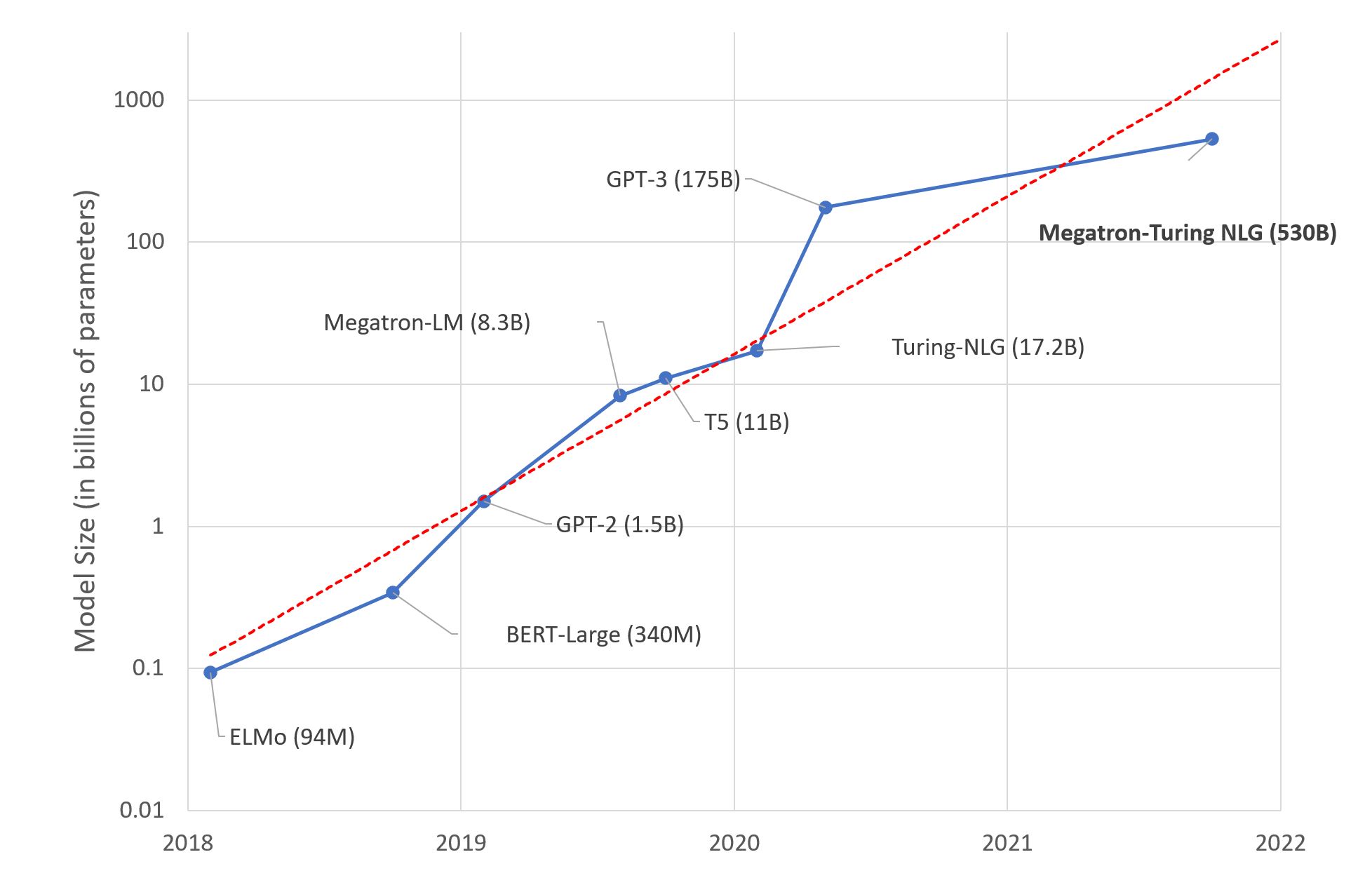

Indeed, the brain’s complexity inspired the development of artificial neural networks, which aimed to solve problems by learning from data, just as we learn from experience. This approach, known as deep learning, has since made enormous contributions to science and was recognized this month with the Nobel Prizes in Physics and Chemistry. We are at the beginning of a new era in science fueled by big data and exascale computing. What influence will deep learning have on science in the decades ahead?

Origins of Large Language Models

My new book, “ChatGPT and the Future of AI: The Deep Language Revolution,” takes a look at the origins of large language models and the research that will shape the next generation of AI. This excerpt describes how the evolution of language influenced large language models and explores how concepts from neuroscience and AI are converging to push both fields forward.

I once attended a symposium at Rockefeller University that featured a panel discussion on language and its origins. Two of the discussants, both titans in their fields, had polar opposite views. Noam Chomsky argued that since language was innate, there must be a “language organ” that uniquely evolved in humans. Sydney Brenner had a more biological perspective and argued that evolution finds solutions to problems that are not intuitive. Famous for his wit, Brenner gave an example: instead of a language gene, there might be a language suppressor gene that evolution decided to keep in chimpanzees but blocked in humans.

Brain Mechanisms and Language Evolution

Equally important were modifications made to the vocal tract to allow rapid modulation over a broad frequency spectrum. The rapid articulatory sequences in the mouth and larynx are the fastest motor programs brains can generate. These structures are ancient parts of vertebrates that were refined and elaborated by evolution to make speech possible.

Learning and Predictions in AI and Brains

LLMs are trained to predict the next word in a sentence. Why is this such an effective strategy? In order to make better predictions, the transformer learns internal models for how sentences are constructed and even more sophisticated semantic models for the underlying meaning of words and their relationships with other words in the sentence. The models must also learn the underlying causal structure of the sentence. What is surprising is how so much can be learned just by predicting one step at a time. It would be surprising if brains did not take advantage of this “one step at a time” method for creating internal models of the world.
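
To make the idea of next-word prediction concrete, here is a minimal sketch in Python. It is a toy bigram counter, not a transformer: it only tallies which word most often follows each word in a tiny made-up corpus, whereas an LLM learns far richer internal models from the same kind of training signal. The function names `train_bigram` and `predict_next` and the sample corpus are hypothetical, chosen only for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for each word, which words follow it in the training text."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    # Pair every word with its successor and tally the pair.
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Illustrative corpus (an assumption, not taken from the book).
corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigram(corpus)

print(predict_next(model, "the"))  # "cat" follows "the" most often in this corpus
print(predict_next(model, "dog"))  # None: "dog" never appears in the corpus
```

Even this crude model captures a sliver of sentence structure purely from "what comes next" statistics. The transformer's advantage is that it conditions each prediction on the whole preceding context rather than on a single word.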