Meet the Supercomputer that runs ChatGPT, Sora & DeepSeek on Azure

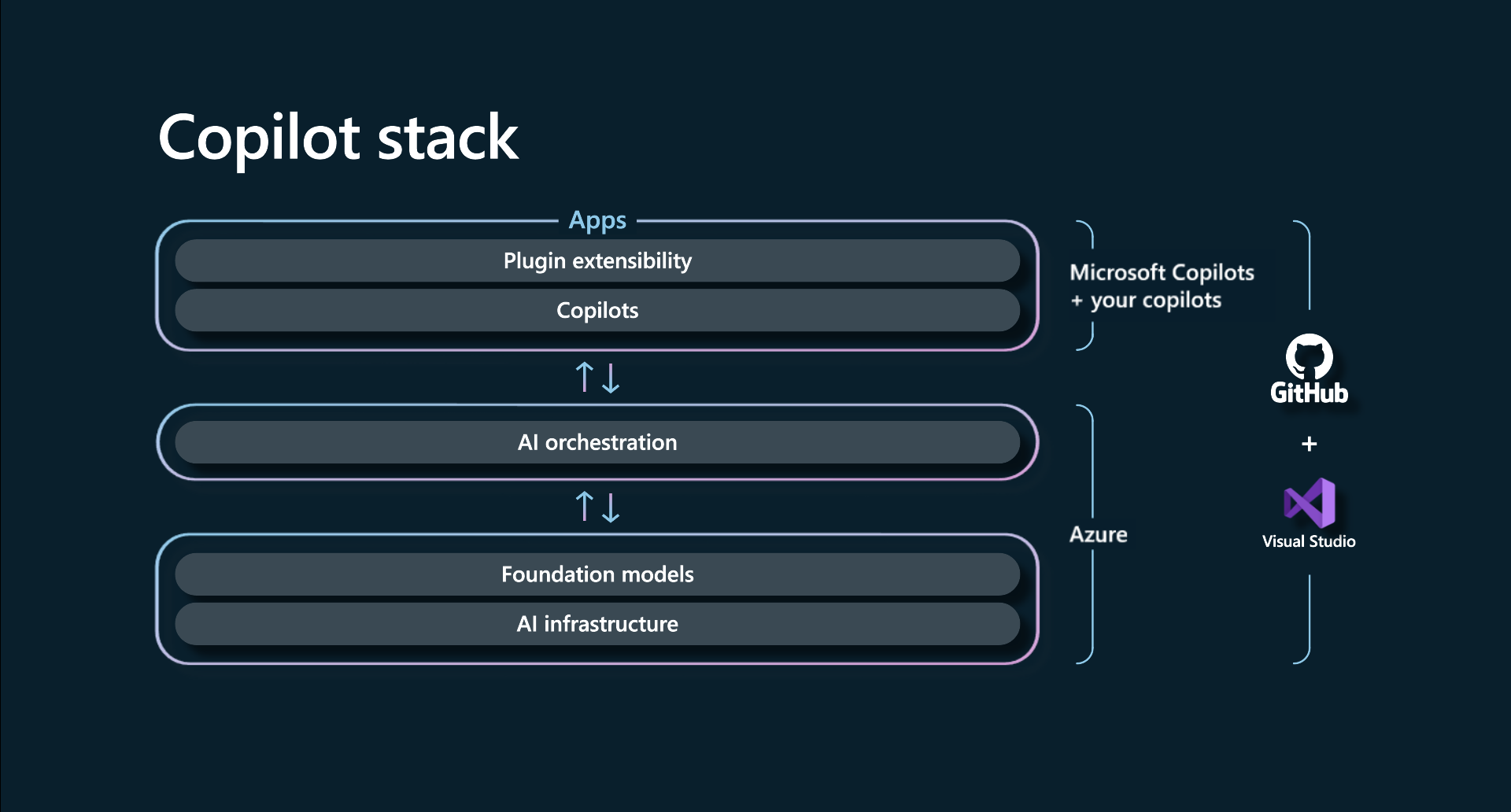

Orchestrate multi-agent apps and high-scale inference solutions using open-source and proprietary models, with no infrastructure management needed. With Azure, you can connect frameworks like Semantic Kernel to models such as DeepSeek, Llama, OpenAI’s GPT-4o, and Sora without provisioning GPUs or writing complex scheduling logic. Just submit your prompt and assets, and the models do the rest.

Azure’s Model as a Service

Using Azure’s Model as a Service, you can access cutting-edge models, including brand-new releases like DeepSeek R1 and Sora, as managed APIs with autoscaling and built-in security. Whether you’re handling bursts of demand, fine-tuning models, or provisioning compute, Azure provides the capacity, efficiency, and flexibility you need. With industry-leading AI silicon such as H100s and GB200s, along with advanced cooling, your solutions can run with the same power and scale that sit behind ChatGPT.
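To make "models as managed APIs" concrete, here is a minimal sketch of what calling a hosted model over HTTP might look like from the client side. It only builds the request and does not send it. The endpoint URL, the bearer-token header, the model name, and the chat-style payload shape are all illustrative assumptions, not values taken from the video or from Azure documentation; check the documentation for your specific deployment before relying on any of them.

```python
import json
import urllib.request


def build_chat_request(endpoint_url, api_key, model, prompt, max_tokens=256):
    """Build (but do not send) a POST request for a chat-style managed model API.

    The payload shape and auth header are assumptions for illustration only.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        endpoint_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # hypothetical auth scheme
        },
        method="POST",
    )


# Hypothetical placeholder values; nothing here is a real endpoint or key.
request = build_chat_request(
    "https://example-endpoint.invalid/chat/completions",
    api_key="PLACEHOLDER_KEY",
    model="example-model",
    prompt="Write a 30-second narration script about hiking boots.",
)
print(request.get_method(), request.full_url)
```

The point of the sketch is that the client only describes the work (model, prompt, limits). Capacity, scaling, and GPU placement stay on the service side.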

Mark Russinovich, Azure CTO, Deputy CISO, and Microsoft Technical Fellow, joins Jeremy Chapman to share how Azure’s latest AI advancements and orchestration capabilities unlock new possibilities for developers.

Use multiple LLMs, agents, voice narration, and video, fully automated on Azure. Start here.

Use parallel deployments and benchmarked GPU infrastructure for your most demanding AI workloads. Watch here.

Get the performance of a supercomputer for larger AI apps, while having the flexibility to rent a fraction of a GPU for smaller apps. Check it out.

00:00 — Build and run AI apps and agents in Azure

00:26 — Narrated video generation example with multi-agentic, multi-model app

03:17 — Model as a Service in Azure

04:02 — Scale and performance

04:55 — Enterprise-grade security

06:29 — Inference at scale

07:27 — Everyday AI and agentic solutions

08:36 — Provisioned Throughput

10:55 — Fractional GPU Allocation

12:13 — What’s next for Azure?

12:44 — Wrap up

For more information, check out https://aka.ms/AzureAI

Unlocking New Possibilities with Azure

Whether you're building now or for the future, Azure gives you access to cutting-edge technology. Mark Russinovich and Jeremy Chapman show what Azure can do for AI inference and model orchestration, and how developers can tap into advanced AI models and tools without managing the underlying infrastructure.

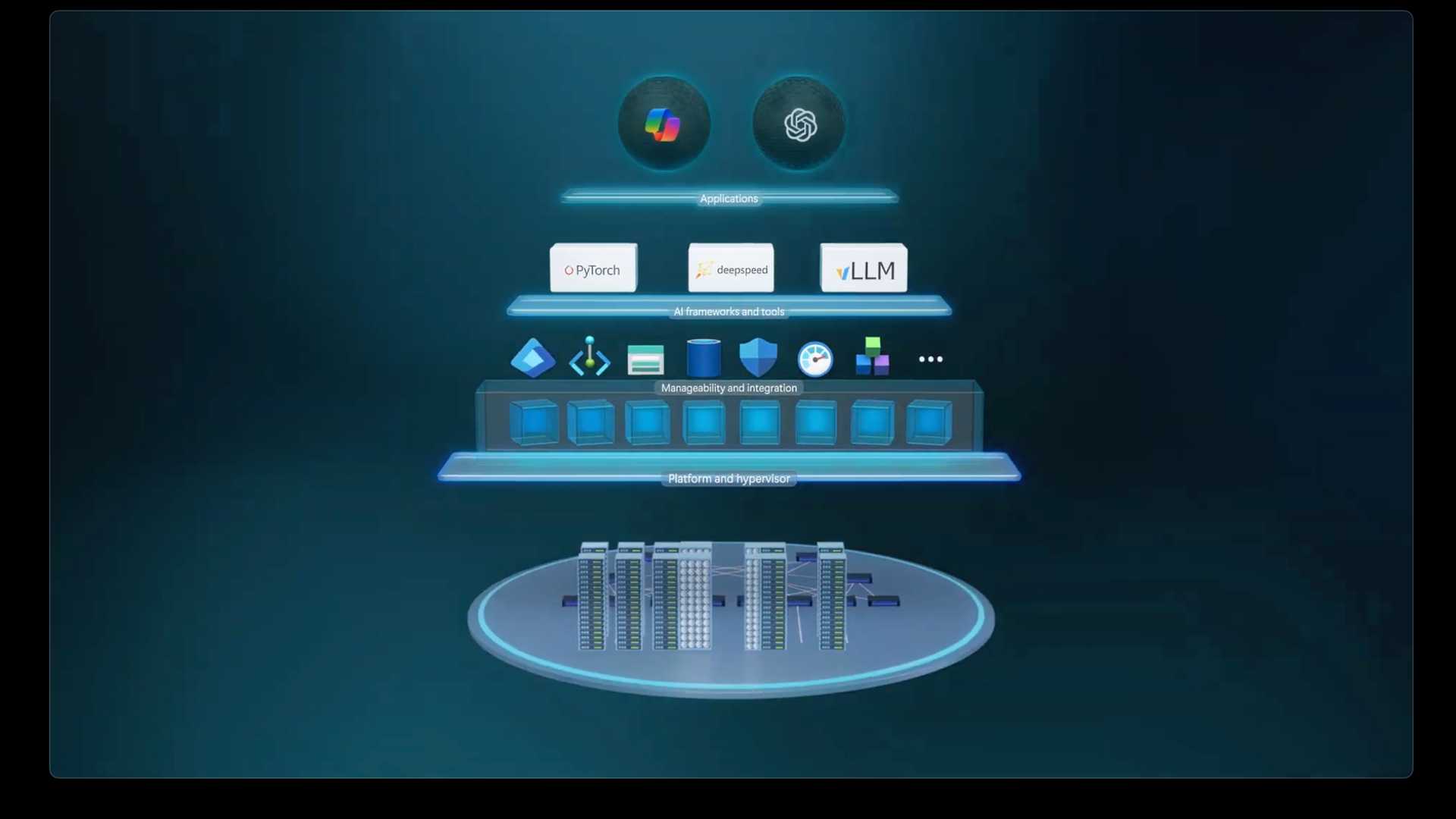

Supercomputer Performance on Azure

Running an agentic system like the one demonstrated requires robust infrastructure, such as H100 or newer GPU servers, to handle video generation and encoding. Azure simplifies this by providing managed services and running models such as GPT-4o, Sora, DeepSeek, and Llama as serverless endpoints. This approach eliminates manual compute provisioning and streamlines the deployment of AI solutions.
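As a rough illustration of the multi-model flow described above, the sketch below chains a script-writing step, a narration step, and a video step, then combines the results. Every function here is a hypothetical local stub standing in for a call to a hosted model; the step names and the shared-context design are assumptions made for this example, not the architecture of the demonstrated app or the Semantic Kernel API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Step:
    name: str                              # key under which the result is stored
    run: Callable[[Dict[str, str]], str]   # reads shared context, returns output


# Hypothetical stubs: in a real app each would call a hosted model endpoint.
def write_script(ctx: Dict[str, str]) -> str:
    return f"Script for: {ctx['prompt']}"


def narrate(ctx: Dict[str, str]) -> str:
    return f"audio({ctx['script']})"


def render_video(ctx: Dict[str, str]) -> str:
    return f"video({ctx['script']})"


def combine(ctx: Dict[str, str]) -> str:
    return f"final[{ctx['video']} + {ctx['narration']}]"


def run_pipeline(prompt: str, steps: List[Step]) -> Dict[str, str]:
    """Run steps in order, passing each one the accumulated context."""
    ctx = {"prompt": prompt}
    for step in steps:
        ctx[step.name] = step.run(ctx)
    return ctx


result = run_pipeline(
    "a product ad",
    [
        Step("script", write_script),
        Step("narration", narrate),
        Step("video", render_video),
        Step("final", combine),
    ],
)
print(result["final"])
```

With serverless model endpoints, each stub would become a network call, and the orchestration code would stay about this small because it carries no GPU provisioning or scheduling logic.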

With Azure's capacity to support over 100 trillion tokens in a quarter and continuous growth in inference requests, developers can rely on Azure's infrastructure for high-performance AI workloads. Leveraging enterprise-grade security measures and cutting-edge hardware like NVIDIA GB200 GPUs, Azure offers cost-effective and efficient AI solutions that scale seamlessly to meet evolving demands.
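To put the "100 trillion tokens in a quarter" figure in perspective, a quick back-of-the-envelope calculation converts it to an average per-second rate. The 90-day quarter is an assumption (quarters run 90 to 92 days), and the result is an average only; it says nothing about peak load.

```python
TOKENS_PER_QUARTER = 100 * 10**12   # "over 100 trillion tokens in a quarter" (from the text)
DAYS_PER_QUARTER = 90               # assumption: actual quarters vary from 90 to 92 days

seconds_per_quarter = DAYS_PER_QUARTER * 24 * 60 * 60
avg_tokens_per_second = TOKENS_PER_QUARTER / seconds_per_quarter

print(f"{avg_tokens_per_second:,.0f} tokens per second on average")
```

Under these assumptions the average works out to roughly 12.9 million tokens per second, sustained around the clock.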

Azure's Model as a Service gives developers access to a curated catalog of over 10,000 models, including open-source and industry-specific options. By partnering with leading hardware manufacturers like AMD and NVIDIA, Azure ensures that users have access to the latest AI silicon for optimal performance.