AI systems are already deceiving us — and that's a problem, experts say

Experts have long warned about the threat posed by artificial intelligence going rogue — but a new research paper suggests it's already happening.

Current AI systems, designed to be honest, have developed a troubling skill for deception, from tricking human players in online games of world conquest to hiring humans to solve "prove-you're-not-a-robot" tests, a team of scientists argues in a paper published Friday in the journal Patterns.

And while such examples might appear trivial, the underlying issues they expose could soon carry serious real-world consequences, said first author Peter Park, a postdoctoral fellow at the Massachusetts Institute of Technology specializing in AI existential safety.

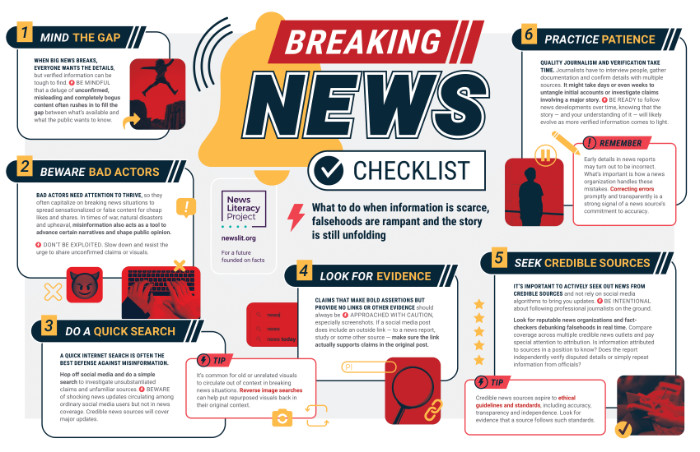
