Liquid AI's LFMs challenge ChatGPT with innovative architecture

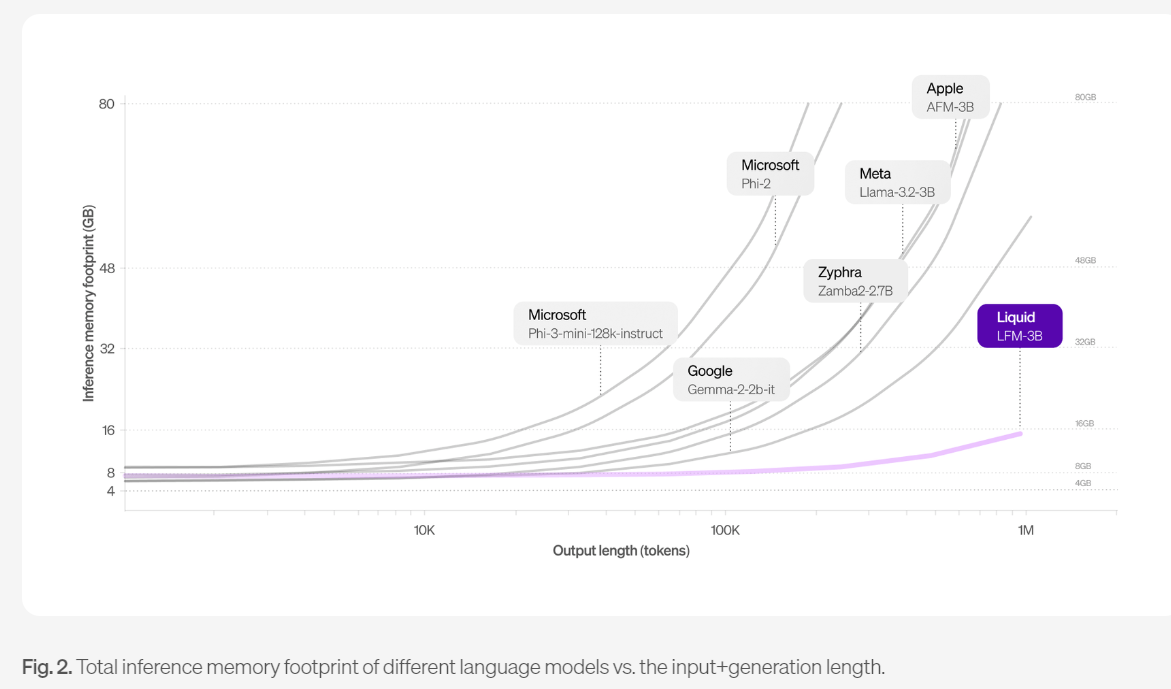

Liquid AI has introduced a new family of generative AI models that it says deliver state-of-the-art performance at every scale while using less memory and running inference more efficiently. These models, called Liquid Foundation Models (LFMs), are built on an architecture that departs from the transformer design behind models such as those powering ChatGPT, which Liquid AI credits for their improved memory efficiency.

Revolutionary Architecture

The LFMs come in several variants, including LFM 1.3B, LFM 3B, and LFM 40B MoE. The number in each name indicates the approximate parameter count in billions. More parameters generally mean a more capable model, but also one that needs more memory and compute to run. The MoE in LFM 40B MoE stands for Mixture of Experts, a design in which the model is divided into sub-networks ("experts") that specialize in different aspects of the input, with a routing mechanism sending each input to only a few of them rather than through the whole model.
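The routing idea behind Mixture of Experts can be illustrated with a toy sketch. The Python below is not Liquid AI's implementation, whose internals are not described here. The names (`make_expert`, `moe_forward`, `gate_weights`), the eight experts, the tiny vector size, and the top-2 selection are all assumptions made for illustration. It shows only the general pattern: a router scores every expert, the highest-scoring few are run, and their outputs are blended.

```python
import math
import random


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(rng, dim):
    """Build a hypothetical expert: a random dim x dim linear map."""
    weights = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(dim)]

    def expert(x):
        return [sum(w * xi for w, xi in zip(row, x)) for row in weights]

    return expert


def moe_forward(x, gate_weights, experts, top_k=2):
    """Route x to the top_k highest-scoring experts and mix their outputs."""
    # Router: one score per expert (a dot product with the input).
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    # Keep only the top_k experts; the rest are never evaluated.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    mix = softmax([scores[i] for i in chosen])
    output = [0.0] * len(x)
    for weight, i in zip(mix, chosen):
        y = experts[i](x)
        output = [o + weight * yi for o, yi in zip(output, y)]
    return output, chosen


# Illustrative sizes only (assumed, not taken from any real model).
rng = random.Random(0)
DIM, NUM_EXPERTS = 4, 8
experts = [make_expert(rng, DIM) for _ in range(NUM_EXPERTS)]
gate_weights = [[rng.uniform(-1, 1) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]

output, chosen = moe_forward([0.5, -1.0, 0.25, 2.0], gate_weights, experts, top_k=2)
print("experts used:", chosen)
print("output:", output)
```

Only two of the eight toy experts run for this input, which is why a Mixture of Experts model can hold many parameters in total while using only a fraction of them per input.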

Performance Benchmark

According to a benchmark graphic shared by Liquid AI, the new LFMs outperform models of similar size from Google, Mistral, Microsoft, and Meta. In particular, the LFM 40B MoE model is reported to surpass the larger Llama 3.1 70B model, a notable result for a model of its size.

Future Prospects

Liquid AI aims to push foundation models beyond today's generative pre-trained transformers (GPTs), focusing on general and expert knowledge, mathematical reasoning, long-context tasks, and multilingual capabilities. While LFMs do well in these areas, they still have room for improvement in tasks such as precise numerical calculations and in aligning their outputs with human preferences.

Openness in Research

Unlike some secretive AI companies, Liquid AI plans to openly share its research and methods through scientific reports. While the models are not open-sourced yet, they are currently accessible on platforms like Liquid Playground, Lambda, and Perplexity Labs.

The LFM stack is being optimized for hardware from NVIDIA, AMD, Qualcomm, Cerebras, and Apple, which should make the models easier to deploy across a wider range of devices.